An event-driven system development and application execution platform that performs high-speed distributed processing of large amounts of data and is suited to mission-critical systems and IoT

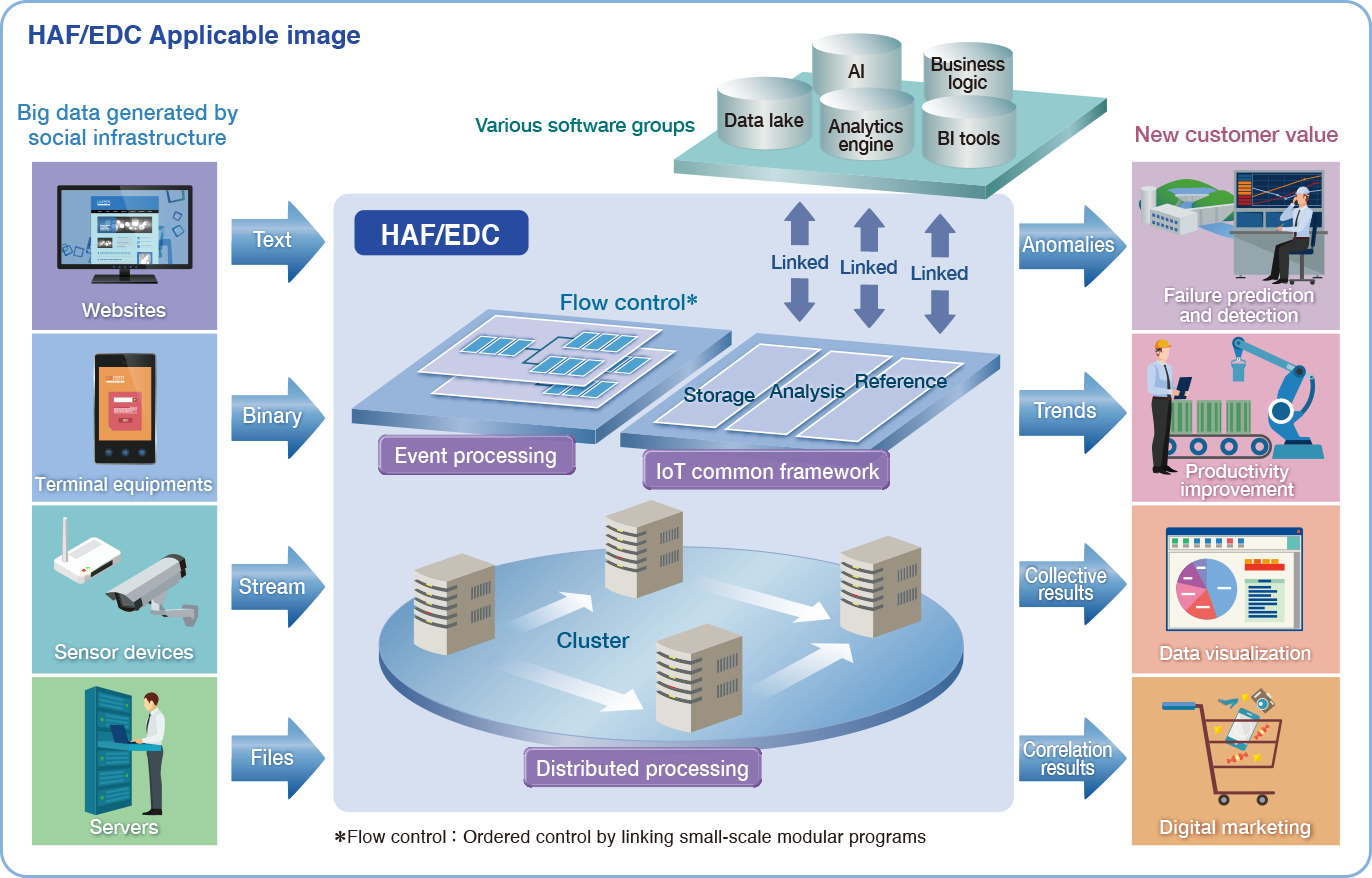

Hitachi Application Framework/Event-Driven Computing (HAF/EDC) is an event-driven platform for system development and application execution, designed for high-speed processing of large volumes of data in a distributed environment. In addition to its existing use in various mission-critical systems, its scope will be expanded to IoT systems in the future.

A system built on HAF/EDC processes data event by event. This allows complex applications to be developed quickly, while distributed processing provides flexible scalability. As a result, HAF/EDC is well suited to IoT systems: a hypothesis can be validated with a small initial deployment, and the system can then scale with data volume.

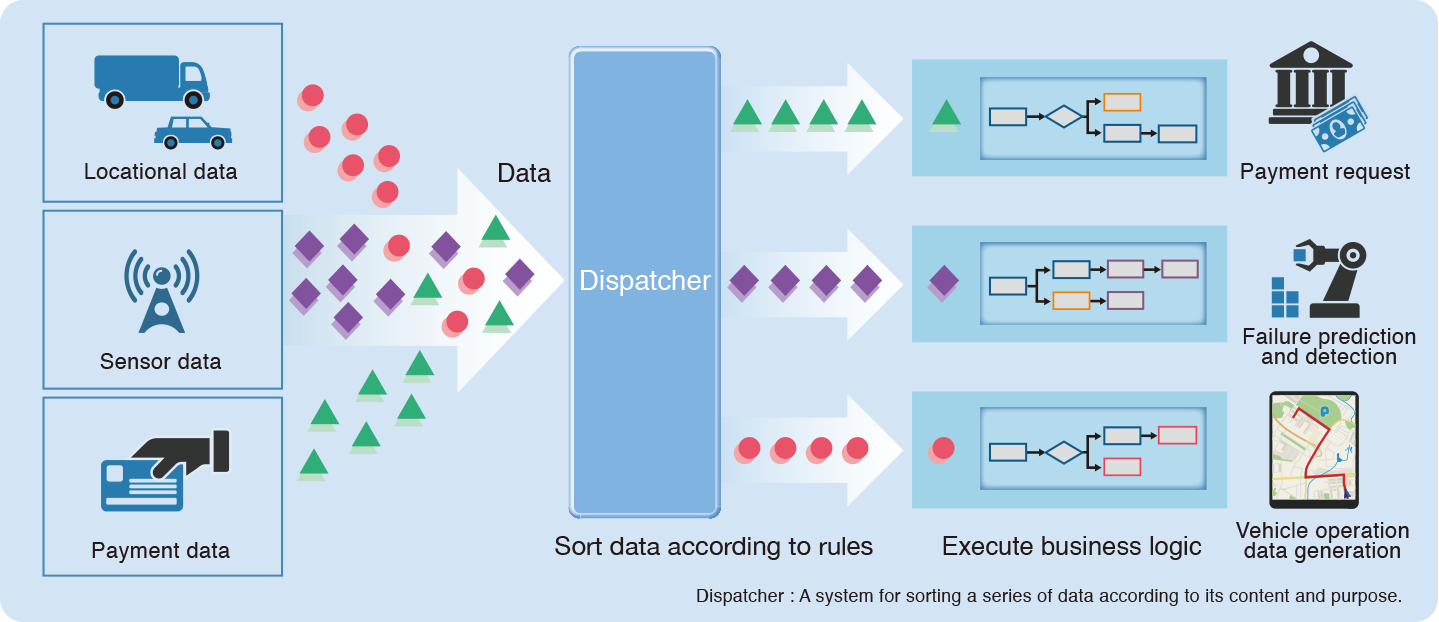

Event-driven computing (EDC) is a processing model in which an event, such as the arrival of data (locational data, sensor data, payment data, and many other kinds), triggers the execution of the business logic that corresponds to that data. Because new data types can easily be accommodated as they are added, EDC is well suited to IoT system design and development.
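To make the model concrete, here is a minimal Java sketch (Java 16 or later, for records) of type-based event dispatch. The names `Event`, `EventDispatcher`, and `EventDispatchDemo` are hypothetical illustrations, not part of the HAF/EDC API. Each arriving event triggers the handler registered for its data type, so supporting a new data type only requires registering one more handler.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical event: a data type tag (e.g. "location", "sensor", "payment") plus a payload.
record Event(String type, String payload) {}

// Hypothetical dispatcher (not the HAF/EDC API): the arrival of an event
// triggers the business logic registered for that event's data type.
class EventDispatcher {
    private final Map<String, Consumer<Event>> handlers = new HashMap<>();

    // Supporting a new data type only requires registering one more handler.
    void register(String type, Consumer<Event> handler) {
        handlers.put(type, handler);
    }

    // Returns true if business logic ran, false if none is registered for the type.
    boolean dispatch(Event event) {
        Consumer<Event> handler = handlers.get(event.type());
        if (handler == null) {
            return false;
        }
        handler.accept(event);
        return true;
    }
}

class EventDispatchDemo {
    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        EventDispatcher dispatcher = new EventDispatcher();
        dispatcher.register("sensor", e -> log.add("sensor logic: " + e.payload()));
        dispatcher.register("payment", e -> log.add("payment logic: " + e.payload()));

        dispatcher.dispatch(new Event("sensor", "temp=21.5"));
        dispatcher.dispatch(new Event("payment", "amount=300"));
        // No handler is registered for "location", so this event is not handled.
        boolean handled = dispatcher.dispatch(new Event("location", "35.68,139.76"));

        System.out.println(log);
        System.out.println("location handled: " + handled);
    }
}
```

Running `EventDispatchDemo` prints the two handled events and reports that the `location` event was not handled, since no logic was registered for that type.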

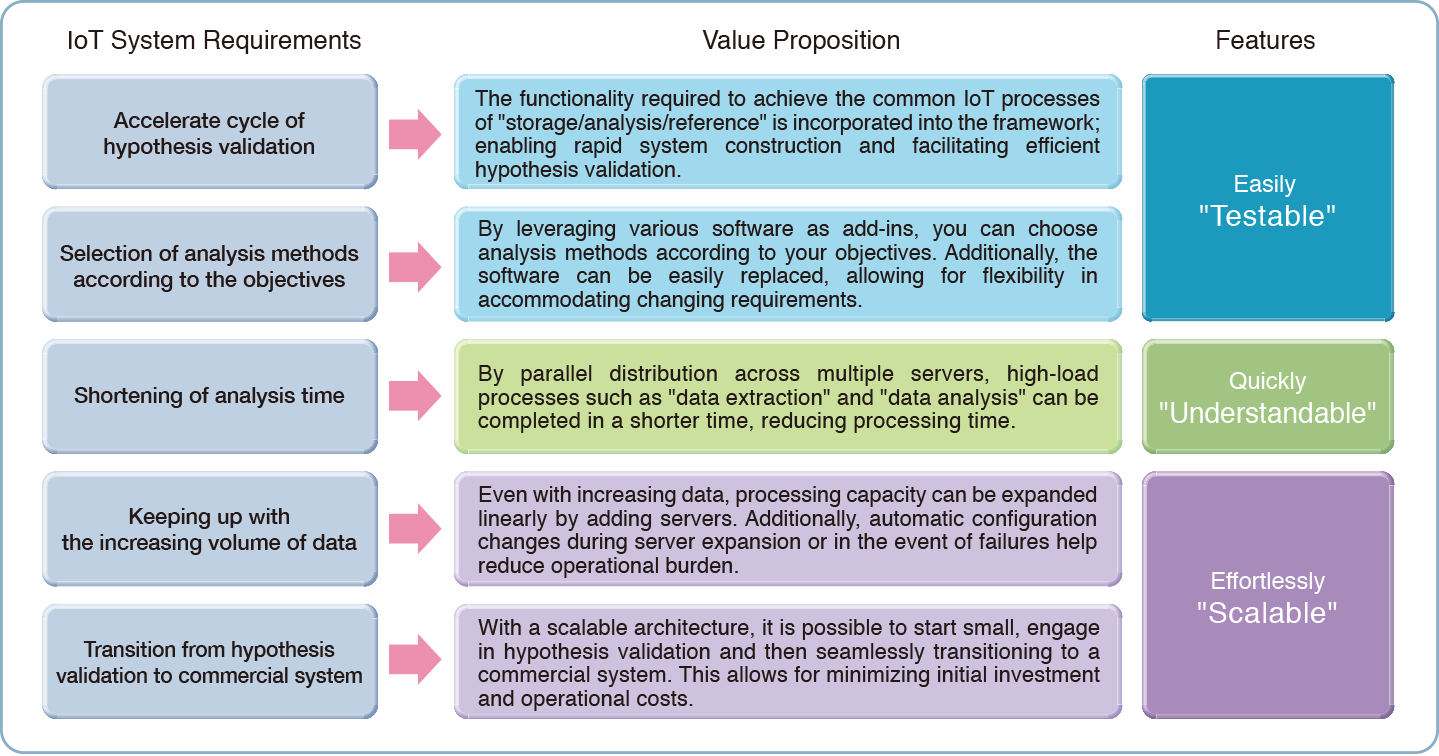

HAF/EDC is a framework based on Hitachi's proven event-driven distributed processing technology. It leverages the following three features to deliver the wide range of value that IoT system development requires.

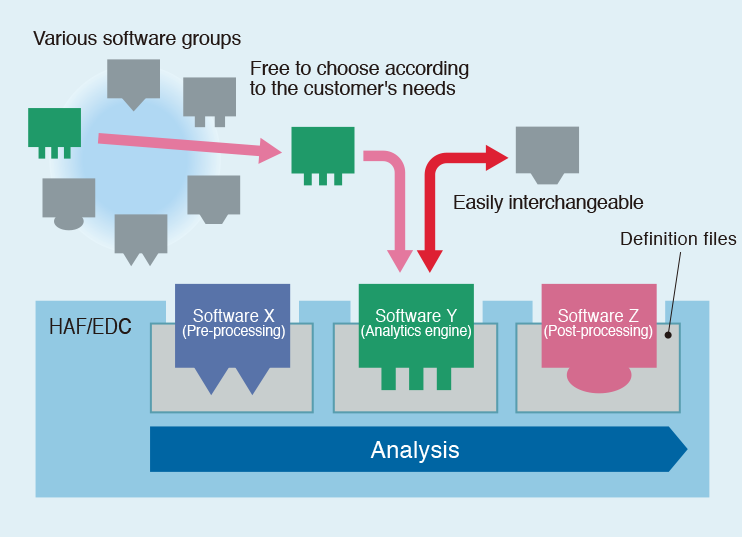

HAF/EDC provides a framework for IoT processes such as storage, analysis, and retrieval, together with an execution platform for integrating the various software required for data storage and analysis. Based on the concept of "minimizing custom development," HAF/EDC enables systems to be built in a short time while flexibly accommodating changes in business requirements.

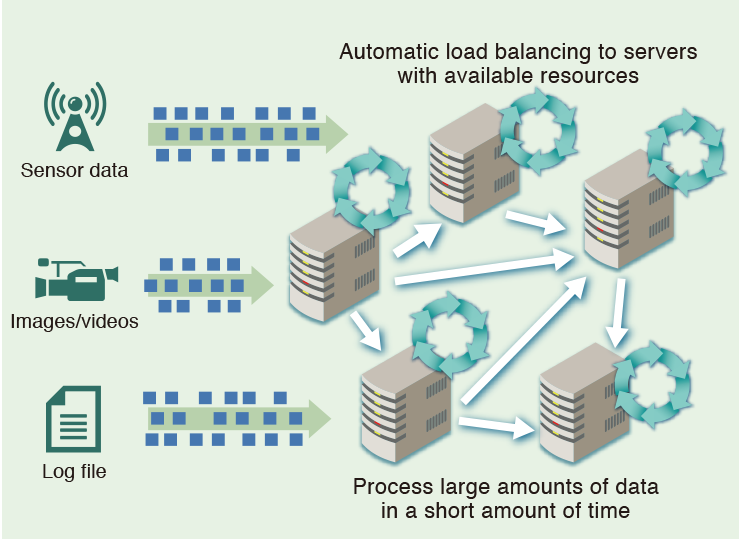

HAF/EDC efficiently distributes high-load analysis, such as analysis that uses large amounts of data and AI, across multiple servers. By automatically load-balancing received data to servers with available resources, it shortens processing time. Because analysis and validation results are available immediately, it accelerates the realization of value from data and the creation of new services.
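The load-balancing idea can be sketched in Java under one simple assumption: a least-loaded strategy in which each received item goes to the server with the most spare capacity. `Server`, `LoadBalancer`, and the capacity model are hypothetical; HAF/EDC's actual balancing algorithm is not described here.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical server model: a fixed capacity and a count of items in progress.
class Server {
    final String name;
    final int capacity;
    int load;

    Server(String name, int capacity) {
        this.name = name;
        this.capacity = capacity;
    }

    int available() {
        return capacity - load;
    }
}

// Least-loaded dispatch (an assumed strategy, not HAF/EDC's actual algorithm):
// each received item goes to the server with the most spare capacity.
class LoadBalancer {
    private final List<Server> servers = new ArrayList<>();

    void addServer(Server server) {
        servers.add(server);
    }

    // Picks the server with the most free capacity and reserves one slot on it.
    // Returns an empty Optional when every server is at capacity.
    Optional<Server> assign() {
        Optional<Server> target = servers.stream()
                .filter(s -> s.available() > 0)
                .max(Comparator.comparingInt(Server::available));
        target.ifPresent(s -> s.load++);
        return target;
    }

    // Releases the slot once the server has finished processing an item.
    void complete(Server server) {
        server.load--;
    }
}
```

If every server is at capacity, `assign()` returns an empty `Optional`, so a caller could hold the item until `complete()` frees a slot.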

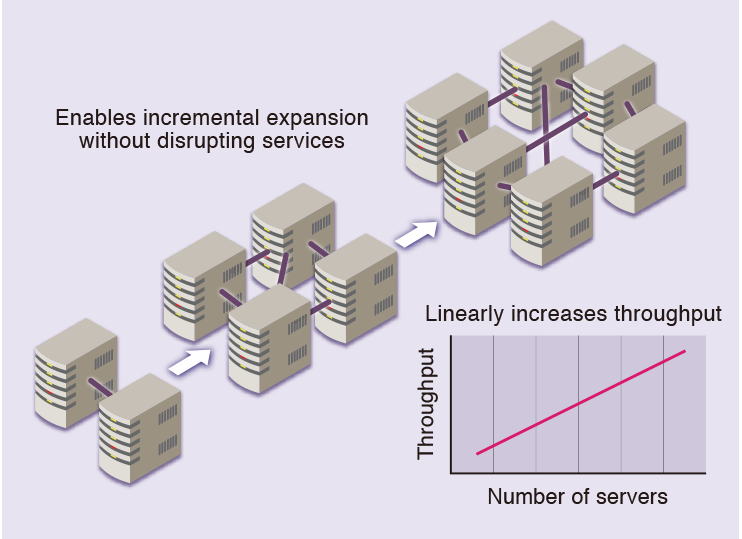

As data volume grows, the system's processing capacity can be increased simply by adding servers, without interrupting service. Because the architecture adjusts its configuration automatically, a hypothesis can be validated at a small scale and then moved smoothly into a commercial system, minimizing initial investment and operational costs.
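One way to let a server pool grow without pausing dispatch is a copy-on-write server list, sketched below in Java. `ScalableServerPool` and its round-robin selection are hypothetical and stand in for whatever mechanism HAF/EDC actually uses: dispatching threads read the list without locking while `addServer` appends a new member, which then starts receiving events.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical server pool (not the HAF/EDC API) that can grow while dispatch continues.
// CopyOnWriteArrayList reads are lock-free, so dispatching threads are not blocked
// while a writer appends a new server.
class ScalableServerPool {
    private final List<String> servers = new CopyOnWriteArrayList<>();
    private final AtomicLong counter = new AtomicLong();

    // Scale out: the new server joins the rotation for subsequent events.
    void addServer(String name) {
        servers.add(name);
    }

    // Round-robin selection over the current membership.
    String next() {
        int size = servers.size();
        if (size == 0) {
            throw new IllegalStateException("no servers in the pool");
        }
        // The list is append-only, so an index below an earlier size stays valid
        // even if another thread adds a server concurrently.
        int index = (int) Math.floorMod(counter.getAndIncrement(), (long) size);
        return servers.get(index);
    }
}
```

Because the list is append-only in this sketch, an index computed from an earlier size remains valid under concurrent additions; removing servers safely would need extra care.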

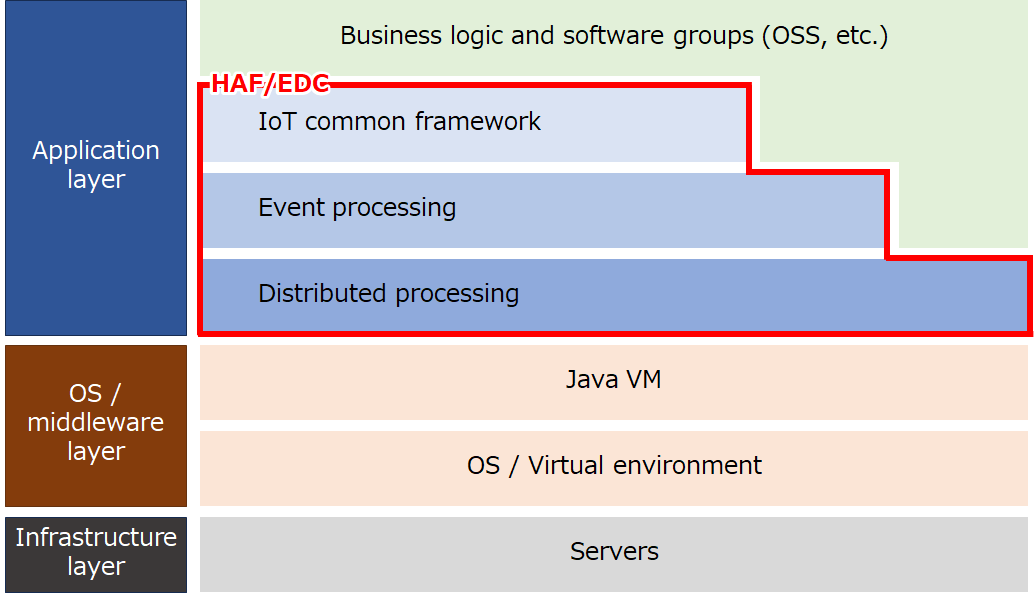

HAF/EDC is an application execution platform that runs on the Java Virtual Machine (Java VM). It is a framework that provides the functionality commonly required in distributed processing systems, built around core functions such as "distributed processing," "event handling," and "IoT common framework."