Using a conversational agent with a humanizing effect for smoother communication between people and AI, with the aim of realizing a comfortable and prosperous society

November 6, 2018

Hitachi, in cooperation with the National Institute for Physiological Sciences and Shibaura Institute of Technology, has developed technology that mimics human emotional expressions as part of research to increase the acceptance of artificial intelligence ("AI") in everyday life. The technology uses a conversational agent ("agent") that "reads" emotions expressed by humans, such as "joy" or "sadness," and "expresses the same emotion" through a computer graphics ("CG") character. Brain measurements confirmed that users perceived the AI's response through the CG character in the same way as a response from a human (humanizing AI). By ensuring smooth communication between humans and AI, Hitachi hopes to contribute to the realization of a comfortable and prosperous society using AI.

Much attention is being focused on AI as a means to realize new services and functions that will make life more convenient and prosperous for humans. Its range of application is extending to many areas, such as marketing and sales, medicine, welfare, and education. Developing technology that is "close" to people is key to promoting digitalization. Likewise, the interface with humans is important for spreading AI more widely in society. In communication with agents, it is important that the human user believes that the agent has the same emotions as humans and is showing empathy (the ability to humanize AI). For example, when caring for an elderly person living alone in an ultra-aged society, the user needs to believe that the agent is affectionate.

In human-to-human communication, if the other party reads a person's emotional expression and returns the same expression, that person feels that the other party is empathizing with them. With past agents, however, it was known that even when the same response was provided, a person's brain activity differed depending on whether they were told that the agent was being operated by a human or by AI. Achieving the same quality of communication between a human and an agent as between two humans was therefore considered a challenge.

Research was conducted to verify the hypothesis that if an agent can mimic human emotional expressions, its human counterpart will feel as though the agent actually has emotions similar to a human's. Technology that identifies emotions from human emotional expressions was combined with technology that expresses the identified emotion to develop an interactive technology. Using this interactive technology, the agent successfully imitated expressed human emotions (Figure 1). Specifically, when "Piyota,"*3 the agent's CG character, expressed joy after the human communicator smiled, the human communicator believed that Piyota "had" emotions even though they knew that the agent was being operated by AI. This facilitated smooth conversation between Piyota and the human.

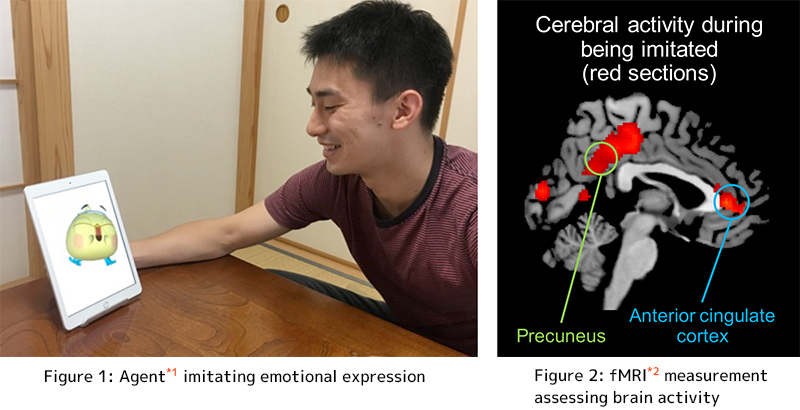

To objectively verify this AI humanizing effect, fMRI was used to measure the brain activity of human subjects when they saw Piyota express joy in response to their smiles (Figure 2). No difference in brain activity was observed between situations where the subject believed that Piyota was being operated by a human and situations where they believed it was being operated by AI. Furthermore, a questionnaire conducted during the experiment showed that subjects reported a "positive feeling" even though they realized that AI was operating Piyota, providing a subjective assessment of the effect.

Hitachi will continue developing agents that act as a go-between for humans and AI, bringing AI closer to humans and working toward the realization of a society in which people can live in comfort and prosperity.

These results were presented at Neuroscience 2018,*4 held in San Diego, U.S.A., on November 4, 2018.

The starting point for this research was the idea that empathy could be generated by mimicking positive emotional expressions. This led to combining technology that identifies people's various expressions in real time with technology that expresses the identified emotions through a CG character (Piyota, Figure 3). Based on this, we created a new interactive technology that generates various expressions by imitating the user's emotional expressions. While past research used a human-like CG character, we developed Piyota, a little-bird CG character capable of natural and diverse emotional expressions, to encourage greater acceptance through its dynamic displays of emotion. Using the technology, the agent identifies the type and degree of the user's expressions, which Piyota then imitates. Even if users know that the agent is being operated by AI, when Piyota expresses the same emotion (e.g., happiness) in coordination with a user's smile, they feel as though the agent is showing empathy in the communication.

Figure 3: CG character “Piyota” expressing various emotions
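The release does not describe how the recognizer and the CG renderer are connected. As an illustration only, the mimicry step ("identify the type and degree of the user's expression, then have the character show the same emotion at the same degree") might be sketched as below. The class names, the emotion labels beyond "joy" and "sadness," the `[0, 1]` intensity scale, and the noise threshold are all hypothetical assumptions, not Hitachi's implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Hypothetical label set; the release names "joy"/"happiness" and "sadness" as examples.
SUPPORTED_EMOTIONS = {"joy", "sadness"}


@dataclass(frozen=True)
class EmotionEstimate:
    """Hypothetical output of a facial-expression recognizer for one frame."""
    emotion: str      # type of expression, e.g. "joy"
    intensity: float  # degree of expression, assumed normalized to [0, 1]


@dataclass(frozen=True)
class CharacterExpression:
    """Hypothetical command telling the CG character which expression to show."""
    emotion: str
    intensity: float


def mimic(estimate: EmotionEstimate, threshold: float = 0.2) -> Optional[CharacterExpression]:
    """Mirror the user's emotion type and degree, or return None to stay neutral.

    Unsupported emotions and weak expressions (below the assumed threshold)
    are ignored so the character does not react to recognition noise.
    """
    if estimate.emotion not in SUPPORTED_EMOTIONS:
        return None
    if estimate.intensity < threshold:
        return None
    # Clamp so the renderer always receives an intensity in [0, 1].
    level = min(max(estimate.intensity, 0.0), 1.0)
    return CharacterExpression(estimate.emotion, level)


def run_loop(estimates: Iterable[EmotionEstimate]) -> List[Optional[CharacterExpression]]:
    """Apply the mimicry rule to a stream of per-frame estimates."""
    return [mimic(e) for e in estimates]


if __name__ == "__main__":
    frames = [
        EmotionEstimate("joy", 0.8),    # clear smile -> character shows joy at 0.8
        EmotionEstimate("joy", 0.1),    # faint smile -> ignored (below threshold)
        EmotionEstimate("anger", 0.9),  # unsupported label -> ignored
    ]
    for reaction in run_loop(frames):
        print(reaction)
```

Returning the same emotion type and degree, rather than a fixed canned reaction, reflects the release's point that Piyota imitates both what the user expresses and how strongly; the threshold is simply one plausible way to avoid mirroring noise.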

To verify this effect, and to objectively evaluate how users' belief that the agent was operated by AI affected their responses, we used fMRI to observe the brain activity of 39 subjects and also administered a questionnaire.

First, we prepared an environment in which users could not tell whether the agent was being operated by a human or by AI. We then told users in some sessions that "the agent is being operated by a human" and in others that "the agent is being operated by AI." In reality, the agent was operated by AI in both situations. We then compared cerebral activity between the two situations.

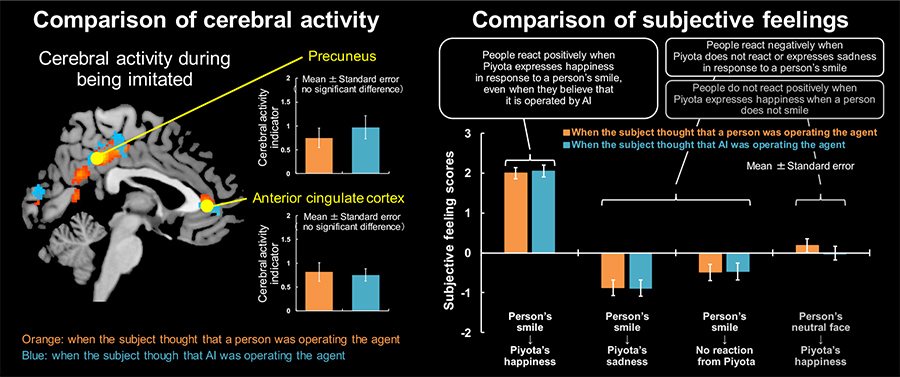

The fMRI measurements showed activity in the anterior cingulate cortex and the precuneus (Figure 2). There was no significant difference in cerebral activity between when users were told that Piyota was operated by a human and when they were told it was operated by AI (Figure 4, left). The questionnaire showed that people reported a positive feeling when Piyota expressed happiness in response to their smiles, and that there was no significant difference in scores between when a human and when AI was said to be operating Piyota (Figure 4, right). These results confirm that when the agent imitated positive emotional expressions, users felt it was interacting with them in the same way as a human, despite knowing that it was operated by AI, demonstrating success in bringing "AI closer to people."

Figure 4: Cerebral activity during imitation of emotional expression under human and AI control (left), comparison results of subjective feelings (right)
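The release does not state which statistical test was used to compare the "human" and "AI" conditions. Purely as an illustration, assuming each subject gave a questionnaire score under both labels (a within-subject design), a paired t-test on per-subject scores could be sketched as below. The function names, the placeholder scores, and the two-sided 0.05 critical value are assumptions; the numbers are invented and are not the study's data.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(scores_human: Sequence[float], scores_ai: Sequence[float]) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for paired per-subject scores.

    Each index is one subject rated under both labels (assumed design).
    """
    if len(scores_human) != len(scores_ai) or len(scores_human) < 2:
        raise ValueError("need two equal-length score lists with at least 2 subjects")
    diffs = [h - a for h, a in zip(scores_human, scores_ai)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def is_significant(t: float, critical: float) -> bool:
    """Two-sided test: significant if |t| exceeds the critical value.

    For 39 subjects (df = 38) and alpha = 0.05, the critical value is about 2.024.
    """
    return abs(t) > critical


if __name__ == "__main__":
    # Hypothetical placeholder scores for four subjects (NOT the study's data).
    human_label = [5, 4, 6, 5]
    ai_label = [5, 5, 5, 5]
    t, df = paired_t(human_label, ai_label)
    print(f"t = {t:.3f}, df = {df}")  # prints "t = 0.000, df = 3"
```

A paired design fits the experiment described above because the same subjects saw both labels, so comparing within each subject removes person-to-person differences in baseline ratings. Note that a non-significant result means no difference was detected; it does not by itself prove the two conditions are equivalent.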

For more information, use the enquiry form below to contact the Research & Development Group, Hitachi, Ltd. Please make sure to include the title of the article.