Flexible and stable motions, even when the robot's field of vision is obstructed or an unexpected force is applied, demonstrated in a chair assembly task

February 21, 2024

To promote the automation of assembly and maintenance tasks for social infrastructure, Hitachi has developed a technology that switches, in real time during work, the importance (attention level) given to multimodal*1 sensor information such as vision and tactile force sense*2, according to the type of work and the environment.

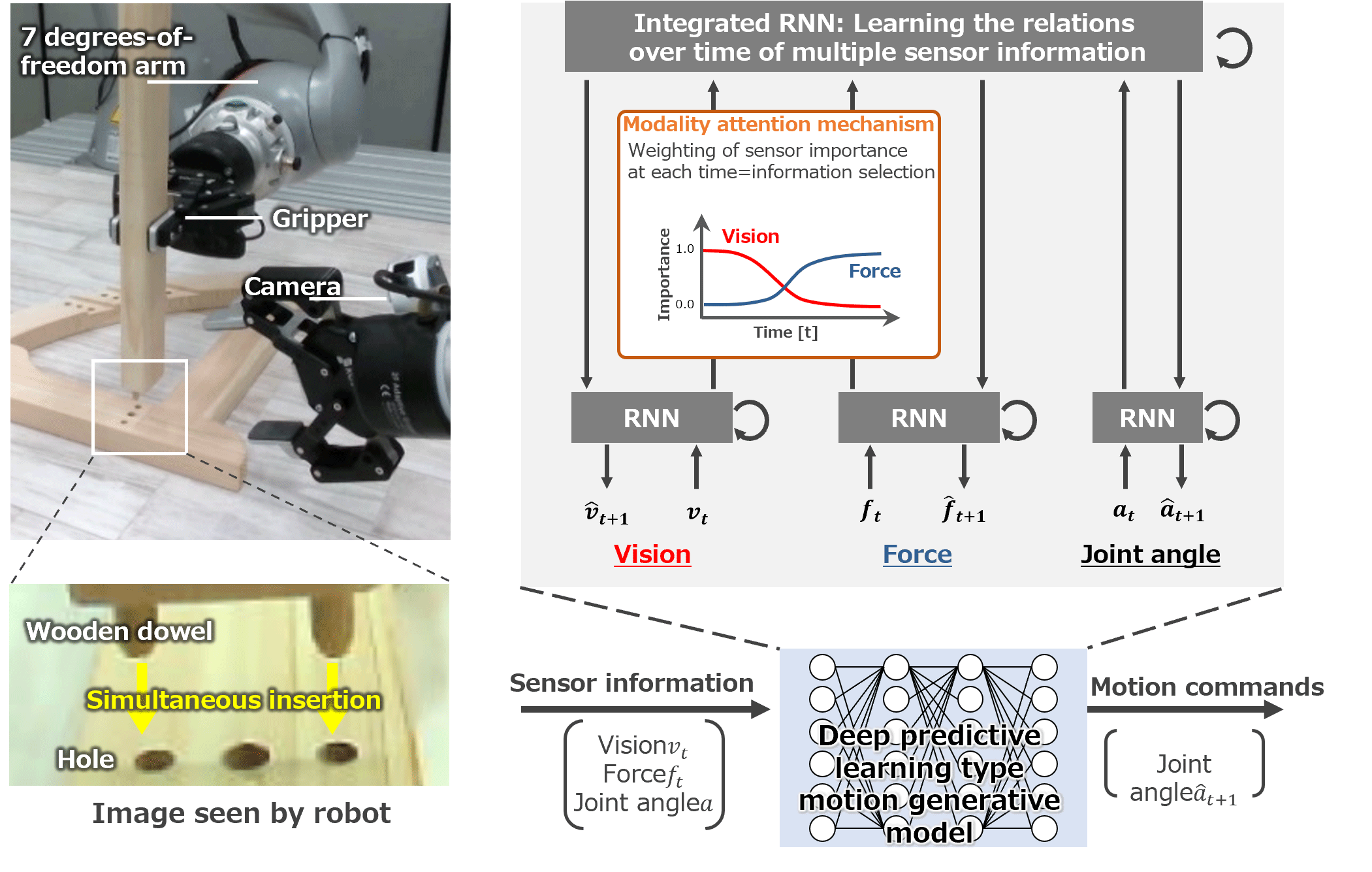

Previously, Hitachi, in collaboration with the research group of Professor Tetsuya Ogata of the Faculty of Science and Engineering at Waseda University, developed a deep predictive learning robot control technology capable of deciding on and performing actions in real time even when the work or the environment changes.*3 The technology Hitachi has now developed is aimed at automating difficult tasks, such as inserting parts within a narrow space or handling large parts that create blind spots. Based on the observation that workers tend to rely on tactile force sense when their vision is constrained, the technology switches in real time the attention paid to (importance of) multimodal sensor information from vision, force, and other senses as the nature of the work or the environment changes (Figure 1). As demonstrated in a chair assembly task, it enables flexible and stable work even when unanticipated events occur, such as an obstruction in the robot's field of vision or the application of a force that was not present at the planning stage.
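The idea of shifting attention between modalities can be illustrated with a minimal sketch. This is not Hitachi's implementation: the function names `softmax` and `fuse`, the per-modality relevance scores, and the toy feature vectors are all hypothetical, and in a learned system the scores would come from a trained model rather than being set by hand. The sketch only shows how a softmax over relevance scores turns into attention weights that decide how much each sensor contributes to a fused representation.

```python
import math


def softmax(scores):
    """Convert raw relevance scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(features, scores):
    """Blend per-modality feature vectors using attention weights.

    features: dict mapping a modality name to a list of floats
              (all lists must have the same length).
    scores:   dict mapping the same names to a raw relevance score
              (hypothetical; assumed to be produced elsewhere).
    Returns (weights_by_modality, fused_vector).
    """
    names = list(features)
    weights = softmax([scores[name] for name in names])
    dim = len(features[names[0]])
    fused = [0.0] * dim
    for name, weight in zip(names, weights):
        for i, value in enumerate(features[name]):
            fused[i] += weight * value
    return dict(zip(names, weights)), fused


# Toy case: the camera view is blocked, so vision receives a low score
# and the force sensor dominates the fused representation.
features = {"vision": [1.0, 0.0], "force": [0.0, 1.0]}
occluded_scores = {"vision": -2.0, "force": 2.0}
weights, fused = fuse(features, occluded_scores)
print(weights)
print(fused)
```

Recomputing the scores at every control step is what would make the switching happen in real time in a sketch like this: when the view clears, raising the vision score shifts the weights back toward the camera.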

Hitachi will apply this technology to develop solutions that assist with a range of assembly and maintenance work, from planning to execution, and will accelerate initiatives to address labor shortages in the social infrastructure sector.

These results were announced in part at the 2023 International Conference on Robotics and Automation (ICRA 2023) and published in IEEE Robotics and Automation Letters.

The robot motions in this research were learned using the AI Bridging Cloud Infrastructure (ABCI) of the National Institute of Advanced Industrial Science and Technology (AIST).

Figure 1: Technology for real-time switching of the attention paid by a robot to vision and force sensor information based on the work to be performed and the environment

(A human simply teaches the robot the work to be performed several times. The robot learns the importance of each type of sensor information and can then perform the work by focusing on specific sensor information according to the nature of the work and the environment.)
