What should future distribution warehouses be like? This is the question that has given birth to Hitachi's "logistics cockpit."

The logistics cockpit combines artificial intelligence (AI) analyses of various warehouse data with video images from cameras installed in the warehouse. It displays the combined results in an easy-to-understand form to support decision-making by professionals on site.

In this interview, the researcher in charge, who took particular care over the impact and enjoyment the new technology offers its users, describes episodes from the development of the logistics cockpit.

UTSUGI Kei

Senior Researcher

(Publication: December 14, 2016)

UTSUGI: Store operations are becoming more sophisticated, and service offerings, including online sales, are being further enhanced. Along with this trend, the products stored in warehouses are becoming increasingly individualized and diversified. With such a wide variety of stored products, the storage volume of each product fluctuates sharply with consumer demand. To cope with this, warehouses need to be operated flexibly. At the same time, products must be supplied quickly to support services such as same-day delivery by courier operators. Distribution warehouses must therefore achieve both flexible and speedy operations, more than ever before.

To solve this issue for distribution warehouses, Hitachi set up a team in 2013 by bringing together specialists from its laboratories in a variety of fields, including AI and robotics. The team began its research by observing actual logistics operations. In 2014, it decided to pursue a more original and fundamental approach rather than simply solving the immediate issues. In other words, we went back to the question of what future distribution warehouses should be like and reconsidered which direction we should take. This approach led us to the concept of an ideal future warehouse in which both flexibility and speed are secured: AI provides the former, and robot technologies bolster the latter.

UTSUGI: That's right. AI is expected to be applied in a variety of areas. However, some people seem not to trust AI, perhaps partly because the grounds for AI analyses are not easily seen. With conventional computer programs, human engineers write the algorithms, so their content can be understood. In contrast, with AI based on machine learning, the process that produces the analysis results is essentially a black box, and no one can see what is going on inside. "Should we still believe the analysis results?" That is what such people would want to ask.

With the logistics cockpit we have developed, site foremen are expected to view the results of AI analyses and decide on countermeasures. If they cannot see the grounds for those results, they cannot take responsibility for their decisions. They will not accept an outcome simply because it was produced by AI. So how should we make the grounds of the analysis results visible, and how should we design the interface between humans and AI? These became very important issues to tackle.

For my part, I had been involved in research on stereoscopic imaging and display devices. Given this background, I was assigned to investigate an interface that would enable humans to accept the results of AI analysis. For site foremen to make responsible judgments based on AI analysis results, it is essential to display the logistics key performance indicators (KPIs) analyzed by AI, together with the grounds for those analyses, in an easy-to-understand format. With this in mind, we developed the logistics cockpit as an interface that bridges humans and AI in a friendly manner.

UTSUGI: The logistics cockpit allows site foremen to grasp what is going on in the warehouse. At the same time, it should let the people involved build a consensus as they discuss the AI-produced data, in order to understand problems and decide on countermeasures. So we had assumed from the start that the logistics cockpit would need to be fairly large, because several people would view the display at once. The development team repeatedly drew sketches and built paper models to work out a larger display and wider viewing angles. Through trial and error, the final product ended up with an even larger display than we had expected. We also paid close attention to the appearance of the logistics cockpit so that it would look innovative and impressive. I believe the completed model has a neo-futuristic and solemn atmosphere, as the word "cockpit" suggests.

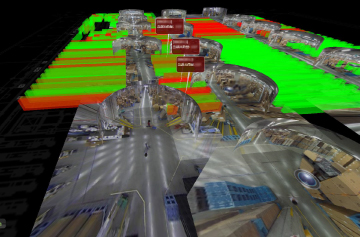

Photo 1: An illustrative photo of the logistics cockpit

UTSUGI: The display shows video images from cameras in the warehouse, overlaid with color-coded changes in the status of the KPIs analyzed by AI. KPIs that AI judges to be "problematic" and those it judges to be "not a problem" are shown in different colors. When site foremen sense a problem from the KPI colors, they can freely view detailed information by zooming into the image or changing the viewpoint on the touch panel, which is part of the interface. With such detailed information, they can implement countermeasures on the spot, just as they would when walking around the actual site. The logistics cockpit also sends alerts, such as "possible problems" detected by AI. Conversely, on-site workers can analyze and compile data for specific portions of the camera images that concern them. We hope that the logistics cockpit can support this two-way interaction between AI and humans.
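The color-coded overlay and alerts described above can be illustrated with a minimal sketch. The article does not say how the AI reaches its "problematic" or "not a problem" judgment, so a simple per-KPI threshold stands in for it here; the class, function names, KPI names, thresholds, and colors are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical colors for the two judgment classes named in the article.
STATUS_COLORS = {"problematic": "red", "not a problem": "green"}


@dataclass
class KpiReading:
    name: str                     # hypothetical KPI name, e.g. "dock 3 waiting trucks"
    value: float                  # current measured value
    limit: float                  # hypothetical threshold standing in for the AI judgment
    higher_is_worse: bool = True  # direction in which the KPI degrades


def judge(reading: KpiReading) -> str:
    """Classify one KPI reading; a plain threshold replaces the AI model here."""
    if reading.higher_is_worse:
        bad = reading.value > reading.limit
    else:
        bad = reading.value < reading.limit
    return "problematic" if bad else "not a problem"


def overlay_colors(readings: list[KpiReading]) -> dict[str, str]:
    """Map each KPI name to the color drawn over its area of the camera image."""
    return {r.name: STATUS_COLORS[judge(r)] for r in readings}


def alerts(readings: list[KpiReading]) -> list[str]:
    """Return alert messages for KPIs flagged as possible problems."""
    return [f"possible problem: {r.name}" for r in readings if judge(r) == "problematic"]


if __name__ == "__main__":
    sample = [
        KpiReading("dock 3 waiting trucks", 7, 5),
        KpiReading("aisle B picking rate", 120, 100, higher_is_worse=False),
    ]
    print(overlay_colors(sample))
    print(alerts(sample))
```

Keeping the judgment in one function (`judge`) means a real model could replace the threshold rule without touching the display or alert code.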

In fact, we did not initially plan to display camera images on the cockpit. However, when we observed the actual site, we found that people on the front lines can see what AI cannot, and that what they see is necessary for solving problems. You know, the site foremen are real professionals. They often say, "Walk around the warehouse and see things with your own eyes." Scenes on the actual site contain a great deal of information, so much that you cannot tell what is important unless you know the site well. Yet, amid all this information, the site foremen can spot the key factors that may cause problems.

UTSUGI: Exactly. If site foremen see the figures calculated by AI after watching the camera images, they can link those figures to the situation in the warehouse. They can then take countermeasures against the problems occurring there.

When a problem occurs, site foremen can sometimes identify the cause and solve it immediately. However, to act on their hunches, they need to actually see the site. It is still difficult for AI to understand what is happening in the warehouse as well as the site foremen can. That's why we decided to use video from cameras in place of the eyes of site foremen walking around the warehouse.

UTSUGI: It does not show the camera images as they are. Conventional surveillance cameras provide video from fixed viewpoints, which leaves blind spots. To eliminate these blind spots, we combined video images from multiple all-direction cameras. This makes it possible to view any scene in the warehouse from any viewpoint.

First, all-direction cameras installed on the warehouse ceiling capture images, which are used to create a three-dimensional map. This gives a bird's-eye view of the entire warehouse from above. The logistics cockpit then overlays panoramic images, synthesized from video taken from many viewpoints, onto the three-dimensional map. This eliminates the cameras' blind spots. We call this display method "2.5-dimensional bird's-eye display technology."
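One ingredient of this kind of display, placing pixels from a ceiling-mounted all-direction camera onto a floor-level map, can be sketched with basic ray-plane geometry. This is not Hitachi's method, which the article does not detail. The sketch assumes each camera outputs an equirectangular panorama (360 degrees across, 180 degrees down, top row pointing straight up) with a known position and height above a flat floor, and it picks the horizontally nearest camera for each floor point as a crude stand-in for multi-view synthesis. All names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CeilingCamera:
    x: float       # camera position on the floor map (meters)
    y: float
    height: float  # height above the floor (meters)
    width: int     # panorama width in pixels, covering 360 degrees of azimuth
    rows: int      # panorama height in pixels, covering 180 degrees (top = straight up)


def pixel_to_floor(cam: CeilingCamera, u: float, v: float):
    """Project equirectangular pixel (u, v) onto the floor plane z = 0.

    Returns (x, y) in map coordinates, or None if the ray never reaches the floor.
    """
    azimuth = 2 * math.pi * u / cam.width - math.pi   # -pi .. pi
    elevation = math.pi / 2 - math.pi * v / cam.rows  # +pi/2 (up) .. -pi/2 (down)
    if elevation >= 0:
        return None  # ray points at or above the horizon
    # Ray: camera + t * direction; solve height + t * sin(elevation) = 0 for t.
    t = -cam.height / math.sin(elevation)
    horizontal = t * math.cos(elevation)
    return (cam.x + horizontal * math.cos(azimuth),
            cam.y + horizontal * math.sin(azimuth))


def floor_to_pixel(cam: CeilingCamera, fx: float, fy: float) -> tuple[float, float]:
    """Inverse mapping: the pixel of this camera that sees floor point (fx, fy)."""
    dx, dy = fx - cam.x, fy - cam.y
    azimuth = math.atan2(dy, dx)
    elevation = -math.atan2(cam.height, math.hypot(dx, dy))
    u = (azimuth + math.pi) * cam.width / (2 * math.pi)
    v = (math.pi / 2 - elevation) * cam.rows / math.pi
    return u, v


def best_camera(cameras: list[CeilingCamera], fx: float, fy: float) -> CeilingCamera:
    """Crude view selection: the camera horizontally closest to the floor point."""
    return min(cameras, key=lambda c: math.hypot(fx - c.x, fy - c.y))
```

To texture a floor map under these assumptions, one would loop over map cells, call `best_camera` for each cell, and sample that camera's panorama at the pixel returned by `floor_to_pixel`. Real systems would also need calibration, lens models, and occlusion handling, none of which is covered here.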

UTSUGI: We call it 2.5-dimensional because a three-dimensional quality is achieved by adding video image information to two-dimensional data. In most cases, a bird's-eye map is easier to understand when it is drawn as an illustration, that is, in two dimensions. However, an illustration does not convey what is actually happening on site. That's why we added video image information. A picture does not need to fully reproduce three-dimensional space as long as people can understand it. In fact, such a picture may be easier for humans to understand than one that fully reproduces the three-dimensional scene.

Photo 2: An illustrative photo of the 2.5-dimensional bird's-eye display technology

In animation, for example, perspective is sometimes extremely exaggerated. In an animated baseball series, a pitcher throws the ball and it suddenly appears to swell. You may wonder what kind of camera could take such a shot! Such exaggeration makes no sense as an accurate three-dimensional representation. Yet when you see it, it feels more realistic than an accurate three-dimensional picture. Likewise, to link humans and AI in a friendly manner, we aim for a design that is as easy to understand as a picture drawn by a good illustrator, rather than one that simply conveys more information than a two-dimensional design.

UTSUGI: The neo-futuristic display was appreciated even more than we had expected by the people at Hitachi Transport System, who cooperated with us on the research. They also suggested that, because the screen displays video images, the logistics cockpit could be used to record life logs of the ever-changing warehouse, or to remotely control warehouses overseas.

Looking ahead, I believe it will be essential to consider what the AI behind the interface should analyze, and what value its suggestions create. We are currently working to expand the scope of analysis beyond logistics while investigating which areas need such AI-generated suggestions.

UTSUGI: To date, I have conducted my research with the idea that, while there are mathematically correct ways of displaying images, they are not necessarily the right ways to show images that people can easily understand or find impactful. Going forward, I'd like to explore how to present images that make an impact on viewers and give them enjoyment, and to research scientific methods for reproducing such images logically and mathematically.

Personally, I want to keep bringing science-fiction thinking into my research. Over the last ten years or so, many advanced technologies have reached the consumer market and become available and familiar to the general public. These technologies are being combined to realize the "future world" once depicted in movies and novels. That is the competition under way now, and I want to keep up with it. Going forward, I hope to nourish and deepen my imagination so that it keeps running ahead of the times.