No one can tell when a large-scale disaster will break out. In preparation for such disasters, technologies to protect data have become increasingly important, among other measures. A variety of technologies to protect important data have been developed to date.

For data, however, "protecting" it is not all that disaster countermeasures require. At the time of a disaster, the system must also make the necessary data readily "available" to people in the affected area.

Based on this view, Hitachi has proposed the "Metro-Area Distributed Storage System" through joint research with Tohoku University. The Company works to create a more secure society by offering new technologies.

NAKAMURA Takaki

Senior Researcher

KAMEI Hitoshi

Researcher

(Publication: October 4, 2017)

NAKAMURA: To date, the mainstay method of protecting data from disasters has been to replicate it to remote sites in advance. Even if the original data is damaged by a disaster, the replicated data at the remote sites remains safe, and you can use it to keep your business running.

However, when the Great East Japan Earthquake occurred in 2011, we saw situations in which people in the damaged areas could not access data that had survived the disaster. The replicated data at remote sites was indeed safe, but the Internet connection was cut off in the disaster-affected areas, so the remotely located data could not be accessed. For example, civil servants of local governments in those areas wanted to refer to residents' information, and people at medical institutions tried to quickly check patients' information, but in vain.

In 2012, the following year, the Ministry of Education, Culture, Sports, Science and Technology sponsored a project to address these social issues*, with Tohoku University playing a central role in implementing it. Hitachi also participated in the project. It was decided that a new storage system should be developed to make data available immediately after a large-scale disaster occurs.

NAKAMURA: The project developed a system that replicates data to nearby sites, in addition to a distant site, to protect it from disasters. The development was based on the idea that data can be kept safe if it is distributed across multiple locations, even within a single area.

At the time of the Great East Japan Earthquake, there were cases in which a wide-area network connection such as the Internet was disrupted while a local-area network connection such as an intranet was still available. Based on this fact, the new system distributes data within a narrow area, such as a prefecture or city, whose sites are connected through intranets. When a large-scale disaster occurs, the system uses the intranets to restore data within the affected area and make it readily accessible.

The conventional concept of protecting data from risks by distributing it across a wide area is called the "wide-area distributed storage system." In contrast, we call the new development the "Metro-Area Distributed Storage System." The idea is to protect data within the same area.

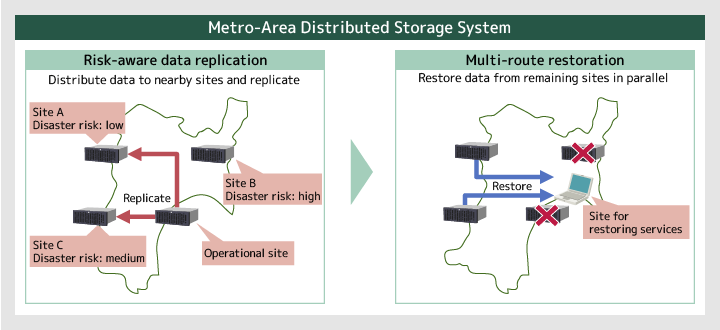

KAMEI: Two techniques were developed to achieve the "Metro-Area Distributed Storage System." One is "risk-aware data replication," which is a replication technology to protect data from disasters. The other is "multi-route restoration," which is a technology to skillfully and quickly restore replicated data. They were respectively developed in the first two years and the last two years of the project.

NAKAMURA: When the project was launched, I was involved from the stage of determining the research direction. In terms of technology, I was mainly engaged in risk-aware data replication, the first of the two technologies.

KAMEI: I joined the project starting with the development of multi-route restoration, the second technology. Taking the baton from my predecessor, I was also in charge of a large-scale verification test.

NAKAMURA: Risk-aware data replication is a technology that replicates the data you want to protect and distributes it, as safely as possible, to many backup sites in neighboring areas.

In traditional replication technologies, remote locations are selected as the backups for replication. This is because, if data is replicated in neighboring areas, there is a high risk of losing the data as the areas are also simultaneously damaged by the disaster. It is an all-or-nothing approach to measuring the risk, considering remote areas to be safe and nearby areas to be risky.

In risk-aware data replication, we changed the way the risks are assessed. It measures the risks of disasters in more detail for many sites within the same area. For example, certain sites are assessed as highly safe while other sites are judged to be moderately safe or highly risky. The technology then considers how to combine the sites to minimize the risks as much as possible, and selects the sites for replicating data. In other words, it distributes data within a limited area as safely as possible.

In the actual measurement of the risks, we use data that quantitatively estimates the disaster risks of the respective areas, such as the hazard maps that each area makes available to the public.
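The site-selection idea described above can be illustrated with a minimal sketch. It is not the project's actual algorithm: the scenario list, the site names, the probabilities, and the functions `loss_probability` and `choose_backup_sites` are all hypothetical. It assumes that hazard-map data has been reduced to a handful of mutually exclusive disaster scenarios, each with a probability and a set of sites it would damage, and it simply tries every combination of backup sites to find the one least likely to be damaged together with the primary site.

```python
from itertools import combinations

# Hypothetical hazard scenarios: (probability, set of sites damaged).
# Scenarios are treated as mutually exclusive; all values are illustrative.
SCENARIOS = [
    (0.02, {"primary", "coast_a", "coast_b"}),  # e.g. tsunami
    (0.05, {"primary", "river_c"}),             # e.g. river flood
    (0.01, {"primary", "coast_a", "hill_d"}),   # e.g. strong earthquake
]


def loss_probability(sites, scenarios):
    """Probability that every site holding a copy is damaged at once."""
    holders = set(sites)
    return sum(p for p, damaged in scenarios if holders <= damaged)


def choose_backup_sites(primary, candidates, k, scenarios):
    """Pick the k candidate sites that minimize the chance of losing all copies."""
    best = min(
        combinations(sorted(candidates), k),
        key=lambda combo: loss_probability((primary, *combo), scenarios),
    )
    return list(best), loss_probability((primary, *best), scenarios)


if __name__ == "__main__":
    candidates = ["coast_a", "coast_b", "river_c", "hill_d"]
    sites, risk = choose_backup_sites("primary", candidates, 1, SCENARIOS)
    print(sites, risk)
```

The exhaustive search is only practical for a small number of candidate sites; a real system with many sites would presumably need a smarter search, which this sketch does not attempt.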

KAMEI: Multi-route restoration is a technology that restores the data distributed through risk-aware data replication from the respective sites in parallel and at high speed.

With the risk-aware data replication technology, we have safely replicated data within a certain area, so the data should be readily usable within that area even if it is affected by a disaster. However, the replicated data is so large that restoring it as is would take a long time. At the time of a disaster in particular, the network slows down drastically due to access concentration and other factors, and restoration becomes remarkably slow as well.

That's why we proposed multi-route restoration, in which the data distributed to many sites is restored in parallel. Restoring data from a single site may take time. However, if the data is restored from two, four, or eight sites in parallel, the time required for restoring could be as short as one half, one fourth or one eighth the time, respectively.
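As a rough illustration of restoring in parallel from several sites, here is a minimal sketch. It is not the project's implementation: `fetch_chunks`, `multi_route_restore`, and `ideal_restore_time` are hypothetical names, each backup site is simulated as an in-memory dictionary mapping a chunk index to its bytes, and the time estimate assumes equal-sized shares per site with no shared network bottleneck.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_chunks(site_store):
    """Stand-in for a network transfer from one backup site (hypothetical)."""
    return dict(site_store)


def multi_route_restore(site_stores):
    """Fetch from all sites in parallel and reassemble the chunks by index."""
    chunks = {}
    with ThreadPoolExecutor(max_workers=len(site_stores)) as pool:
        for part in pool.map(fetch_chunks, site_stores):
            chunks.update(part)
    return b"".join(chunks[i] for i in sorted(chunks))


def ideal_restore_time(single_site_seconds, n_sites):
    """Idealized estimate: equal shares per site and no shared bottleneck."""
    return single_site_seconds / n_sites


if __name__ == "__main__":
    # Three simulated sites, each holding some of the numbered chunks.
    sites = [{0: b"pre", 2: b"ip"}, {1: b"scr"}, {3: b"tion"}]
    print(multi_route_restore(sites))
    for n in (2, 4, 8):
        print(n, ideal_restore_time(80, n))
```

In practice the speed-up would fall short of the ideal 1/n whenever the routes share a congested link or the sites hold unequal amounts of data.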

Figure 1: Structure of the Metro-Area Distributed Storage System

NAKAMURA: Well, I believe the idea itself is easy to understand, but turning it into a working system was very difficult.

KAMEI: If you ask me, I would say I encountered more hardships in constructing and operating the system. The original system had around 20 storage devices, but we expanded it to more than 100 devices in one go for the verification test. At the design stage, I was sure that the system would work without any problems. However, it did not go as we had expected at all. We had to tear down and rebuild the system we had constructed, and we prepared tools to monitor the trouble spots.

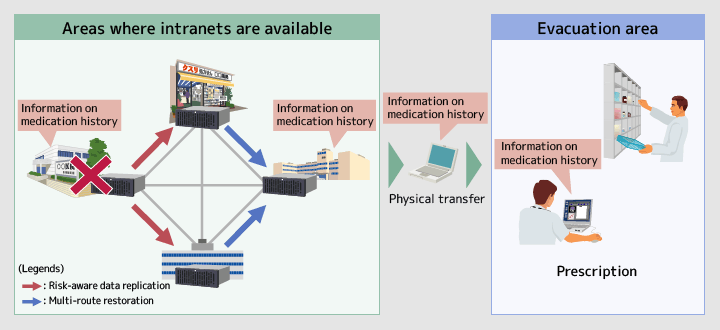

KAMEI: Yes, that's right. We conducted a verification test in November 2016, assuming a large-scale disaster, in cooperation with pharmacists in the Miyagi Prefecture Pharmaceutical Association. Students of Tohoku University also participated in the test, as the research was conducted jointly with the university.

NAKAMURA: The test was open to the public, and we had only one shot at it. We could not afford to fail. That was really tough for us.

KAMEI: Before the test, we assumed a variety of scenarios and rehearsed in stages. I practiced with the students and the pharmacists, and also on my own. We prepared quite thoroughly, but during the actual test I was in a cold sweat all the same. Looking back though, it was a good experience for me.

KAMEI: The test involved the "prescription system" that is generally used at pharmacies. Assuming a large-scale disaster, the test was to reconstruct the system and make it usable at evacuation shelters. The prescription system holds records of the medicines prescribed to patients and the patients' symptoms.

First, the data in the prescription system was divided in advance, and distributed to many sites through the risk-aware data replication technology. Then, assuming that a large-scale disaster broke out, the entire prescription system was restored through the multi-route restoration technology so that the system could be used at the evacuation shelters. Because the test assumed a disaster, we intentionally created a situation in which the networks had delays before restoring the data. Even so, we confirmed that the data was restored at almost the same speed as restoring in normal operations. We asked people from the Pharmaceutical Association to actually use the restored prescription system to simulate their prescription activities at the time of a disaster.

NAKAMURA: Naturally, it may happen that even intranets do not work after a large-scale disaster. For areas where the intranets are interrupted, we physically transport terminals there to fill the gap. In the verification test, we carried out this physical transport as well, assuming that there were areas where intranets were usable to some extent and areas where intranets were interrupted and no communication was possible.

Figure 2: Verification Test with the Miyagi Prefecture Pharmaceutical Association

NAKAMURA: The test was received quite warmly by the pharmacists.

They had actually made prescriptions in the disaster-affected areas and experienced firsthand the hardships of a real disaster. For example, when the pharmacists asked patients at the evacuation shelters what medicine they usually took, the patients would reply, "something white and round." So the pharmacists asked about the type of packaging and anything else the patients could remember, and prescribed medicines based on what they heard. That's what they told us.

In the questionnaire conducted after the test, there were many positive replies saying that they "want to actually use this system" and that they should be able to "make appropriate prescriptions even in disasters if the symptoms and medication history data can be restored like this."

KAMEI: On the day of the test, I was with the students behind the scenes, operating the system. The students often made technical comments on the system itself, and some of them were right on point. In such ways, we were stimulated by many people, and I believe that allowed us to create a good system.

NAKAMURA: We had many difficulties, but I think it was good that we held the test, because there are things you cannot find out unless you actually put them into practice.

NAKAMURA: I think the system will be applicable to risks other than large-scale disasters as long as two conditions are met: the risks can be quantified, and there are many sites within the relevant area.

For example, the system may be applied to the risk of blackouts. We seldom have blackouts in Japan, but there are areas in other countries where blackouts tend to happen as the power supply is unstable. In such areas, the system may be utilized for protecting data from blackouts by quantifying the probability of blackouts. As such, I hope to expand the scope of the research so that it is useful in situations other than large-scale disasters, such as in the risk of failures that happen in normal times.

KAMEI: The newly developed system is a prototype for verifying the principles, and its operations are rather complicated. If you ask me whether the system can be readily used by people who have little knowledge of IT, I would say it is a bit difficult right now. I want to refine the system so that many people can use it fairly easily.

As large-scale disasters are forecast to happen in various areas, I think we have to prepare for them. We have developed technologies to protect data from disasters but, if the system remains difficult to use, it won't work when it is needed. We will work to make the system easier to use and closer to practical application. By doing so, I hope to refine it into a system that can securely protect what must be protected.

NAKAMURA: During the development, I was transferred to Tohoku University for the work. Partly because of this, I now see my goals from a different perspective. I feel it was really good that Hitachi conducted the research jointly with the university. Corporations and universities each have their own advantages, and the joint research successfully incorporated both. Joint research with a university makes it possible to achieve what research by a corporation alone cannot attain. That's what I strongly felt through the project. Going forward, I hope to continue research of this type.