Diagnostic ultrasound equipment produces images of the fetus in the womb. Lately there has been growing demand for clearer images of the fetus, not only from doctors who use the equipment for diagnosis but also from expecting mothers who want to see how their babies are growing inside them. To address this demand, Hitachi has sought to produce images that look cute rather than realistic, closer to what expecting mothers picture as "a cute baby."

(Publication: July 24, 2015)

NOGUCHI: Ultrasound is sound with a frequency above the range of human hearing. Diagnostic ultrasound equipment emits this inaudible sound into the human body and uses the echoes that return from the body to determine the positions and shapes of organs and other structures, from which it creates images. It is widely used, primarily in medicine. Diagnostic ultrasound equipment generally produces black-and-white cross-sectional images, but more recently, image processing technologies have made it possible for the equipment to display stereoscopic color images.

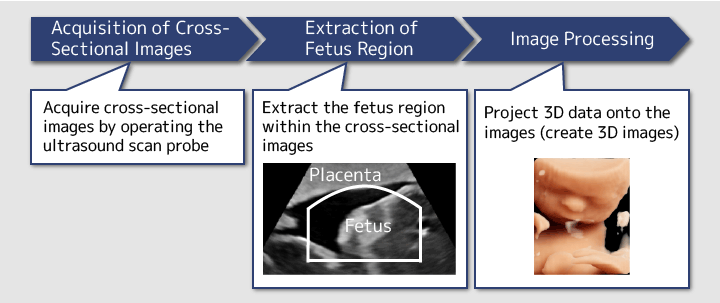

For this latest development, we conducted research on the diagnostic ultrasound equipment that obstetricians and gynecologists use to monitor the fetus. Let me explain how 3D images of the fetus are displayed. First, a device called a probe is placed on the pregnant woman's abdomen, where it emits ultrasound to obtain cross-sectional images. Next, the fetus region is extracted from those cross-sectional images. Finally, image processing adds color and 3D effects to create the image of the fetus.
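The last two steps (extracting the fetus region, then adding color and 3D effects) can be illustrated with a toy sketch. The article does not describe Hitachi's rendering method, so everything below is an assumption: the stack of cross-sections is treated as a 3D grid `volume[z][y][x]` of echo intensities, a boolean `mask` of the same shape marks the already-extracted fetus region, and for each line of sight the code keeps the first bright voxel inside the mask, shades it by depth and applies a skin tint. The names `render_surface`, `threshold` and `tint` are hypothetical, and real equipment uses far more sophisticated volume rendering.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]

def render_surface(volume: List[List[List[float]]],
                   mask: List[List[List[bool]]],
                   threshold: float,
                   tint: RGB = (1.0, 0.8, 0.7)) -> List[List[RGB]]:
    """Toy surface rendering of an extracted region (hypothetical helper).

    volume[z][y][x] holds echo intensities, with z increasing away from the probe.
    mask[z][y][x] is True inside the extracted fetus region.
    For each (y, x) line of sight, the first masked voxel at or above `threshold`
    is shaded by depth (nearer is brighter) and multiplied by an RGB tint.
    Lines of sight that hit nothing stay black.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    image: List[List[RGB]] = [[(0.0, 0.0, 0.0)] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for z in range(depth):
                if mask[z][y][x] and volume[z][y][x] >= threshold:
                    shade = 1.0 - z / depth  # simple depth cue
                    image[y][x] = (tint[0] * shade, tint[1] * shade, tint[2] * shade)
                    break
    return image
```

Because voxels outside `mask` are skipped, a bright structure such as the placenta that lies outside the extracted region does not hide what is behind it, which is why the extraction step matters for the final picture.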

Figure 1: Process to obtain stereoscopic images using diagnostic ultrasound equipment

SHIBAHARA: The images generated by conventional diagnostic ultrasound equipment are not very attractive in terms of color, texture, and 3D effect, and for a long time people simply had to settle for unclear images. Only recently has a trend toward better picture quality emerged, and Hitachi, too, began research on image processing aimed at clearer, more attractive images.

When we started the research, we really wanted to create realistic images indistinguishable from actual photographs. However, by pursuing realism we risked simply imitating what our competitors were doing. Reflecting that the development was meant to serve pregnant women, we eventually decided to pursue cuter images that would please expecting mothers.

In the end, we believe this approach was the right one. During the research we had the opportunity to see photos of actual fetuses. Their skin lacks melanin pigment and is nearly transparent, so blood vessels show through; pursuing realism could have produced something a little unsettling. That convinced us that realistic images are not always the best.

SHIBAHARA: Exactly. We began our research by asking, "What is cuteness?" While we wanted to create images that would please expecting mothers, we had no idea how to define "cute" or "pleasing."

Essentially, the element that can be adjusted in stereoscopic images of a fetus is color. Our research therefore focused on the impression given by skin color. We decided to use machine learning, a field of artificial intelligence research, to quantify how "cuteness" or "preferability" changes as skin color changes.

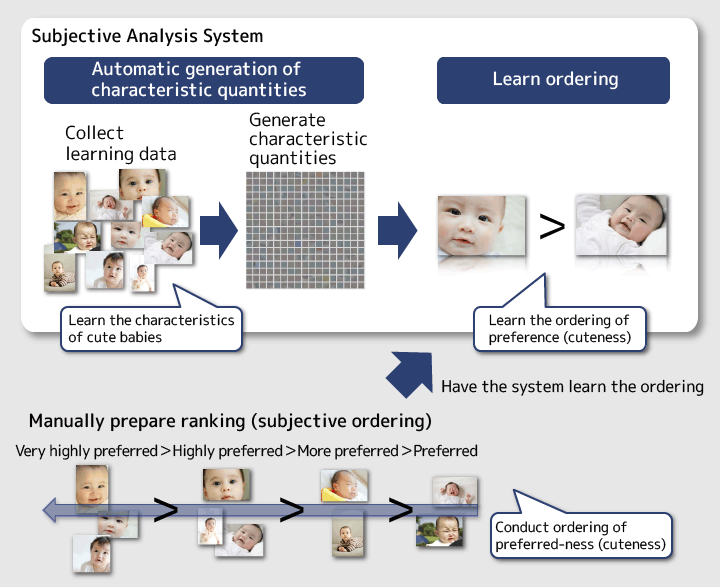

NOGUCHI: Our idea was to create a Subjectivity Analysis System that numerically analyzes people's subjective judgments. The first step was to have the system learn which characteristics make babies look cute. If you search the Internet for "cute baby," you find many images of cute babies of the kind that might appear in movies. We had the system learn from as many such images as possible.

SHIBAHARA: Next, we had the system learn how people ordered the images by cuteness. Each ordering expressed a human preference, for example that image 1 looks cuter than image 2, and the system learned from these orderings.
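The article does not say which learning method the subjectivity analysis system uses. One common way to learn from orderings such as "image 1 looks cuter than image 2" is pairwise ranking with a logistic loss (a Bradley-Terry style model), sketched below under stated assumptions: each image is already reduced to a short numeric feature vector, and the score is linear in those features. The function names, the features and the example pairs are all hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def score(weights: Sequence[float], features: Sequence[float]) -> float:
    """Linear 'cuteness' score of one image's (hypothetical) feature vector."""
    return sum(w * f for w, f in zip(weights, features))

def train_pairwise(pairs: List[Tuple[Sequence[float], Sequence[float]]],
                   n_features: int, lr: float = 0.1, epochs: int = 200,
                   seed: int = 0) -> List[float]:
    """Learn weights from (preferred, other) pairs.

    Models P(preferred ranked above other) = sigmoid(score(preferred) - score(other))
    and runs stochastic gradient descent on the negative log-likelihood.
    """
    rng = random.Random(seed)
    weights = [0.0] * n_features
    order = list(pairs)
    for _ in range(epochs):
        rng.shuffle(order)
        for preferred, other in order:
            margin = score(weights, preferred) - score(weights, other)
            # The gradient of -log(sigmoid(margin)) w.r.t. margin is -(1 - sigmoid(margin)),
            # so step along (preferred - other), scaled by 1 - sigmoid(margin).
            step = lr * (1.0 - sigmoid(margin))
            for i in range(n_features):
                weights[i] += step * (preferred[i] - other[i])
    return weights

# Hypothetical example: each pair is (features of the cuter image, features of the other).
pairs = [([0.9, 0.2], [0.1, 0.8]),
         ([0.8, 0.5], [0.3, 0.5]),
         ([0.6, 0.9], [0.2, 0.1])]
weights = train_pairwise(pairs, n_features=2)
```

Learning from pairs rather than absolute ratings fits the way the interview describes the data: people only have to say which of two images looks cuter, which is easier to judge consistently than assigning a number.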

Figure 2: How the subjectivity analysis system learns the subjective judgments (cuteness)

SHIBAHARA: After the subjectivity analysis system had learned "cuteness," we had it numerically score stereoscopic fetus images that we prepared with different skin-color adjustments. A higher score meant the adjustment was an improvement; a lower score meant it was not. Eventually, we extracted several skin-color patterns likely to look cuter and incorporated them into the diagnostic ultrasound equipment.
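The score-and-select loop described above might look roughly like the following sketch. It is only an illustration under assumptions not found in the article: a skin tint is an RGB triple in [0, 1], its features are simply its hue, saturation and value from Python's `colorsys` module, and a linear `weights` vector stands in for the trained model. Candidate tints are generated by small hypothetical shifts in HSV space, scored, and the top few are kept.

```python
import colorsys
from itertools import product
from typing import Iterator, List, Sequence, Tuple

RGB = Tuple[float, float, float]

def color_features(rgb: RGB) -> Tuple[float, float, float]:
    """Hypothetical features of a skin tint: its (hue, saturation, value) in [0, 1]."""
    return colorsys.rgb_to_hsv(*rgb)

def cuteness_score(rgb: RGB, weights: Sequence[float]) -> float:
    """Linear score; `weights` stand in for a model trained on ranked images."""
    return sum(w * f for w, f in zip(weights, color_features(rgb)))

def candidate_tints(base: RGB, dh: Sequence[float], ds: Sequence[float],
                    dv: Sequence[float]) -> Iterator[RGB]:
    """Yield variations of `base` shifted in HSV space (saturation/value clamped to [0, 1])."""
    h, s, v = colorsys.rgb_to_hsv(*base)
    for a, b, c in product(dh, ds, dv):
        yield colorsys.hsv_to_rgb((h + a) % 1.0,
                                  min(max(s + b, 0.0), 1.0),
                                  min(max(v + c, 0.0), 1.0))

def top_tints(base: RGB, weights: Sequence[float], k: int = 3) -> List[RGB]:
    """Score every candidate tint and keep the k highest-scoring ones."""
    candidates = candidate_tints(base, (-0.02, 0.0, 0.02),
                                 (-0.1, 0.0, 0.1), (-0.1, 0.0, 0.1))
    return sorted(candidates, key=lambda rgb: cuteness_score(rgb, weights),
                  reverse=True)[:k]
```

A higher score is read as an improvement, mirroring the interview; treating hue linearly is a simplification, since hue is circular.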

NOGUCHI: What is interesting about this research is that, to teach the machine cuteness, we used images of babies several months old rather than images of fetuses or newborns.

Because pregnant women have never seen their actual fetuses, they picture their babies as they will look after birth. So we had the system learn this ideal held by expecting mothers as the basis for its cuteness assessments. That is the gist of our research.

SHIBAHARA: That is because it is difficult for people to assess subjective feelings. If we had tried to find cute skin colors by human judgment alone, we might have ended up as something like color sommeliers: changing the color slightly, asking "Is this cute? Is that cute?", and never being able to tell. As people keep making adjustments they lose sight of their own standards, but machines do not have this problem. By building human subjective judgment into the machine, we obtained assessments closer to what human subjectivity itself would produce.

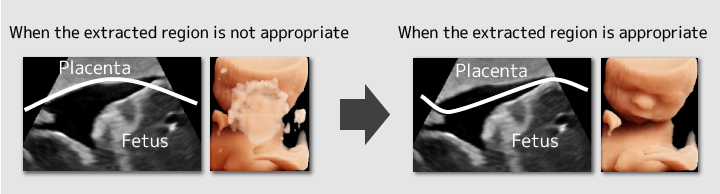

NOGUCHI: As part of the processing that precedes 3D fetus image generation, we developed technology to automatically extract the fetus region from the cross-sectional images. Conventionally, doctors draw boundary lines manually to extract the fetus region. This only allows them to draw simple curves, and if the region is not extracted properly, problems arise: part of the fetus may be missing from the stereoscopic image, or part of the placenta may appear and hide the fetus.

SHIBAHARA: Such images make expecting mothers uneasy and lead them to wonder whether the baby is growing normally or has some abnormality, when in reality the concern is unnecessary: the doctor is performing the diagnosis, and the problem is simply that the extracted region is inappropriate. If the fetus region is extracted automatically and appropriately, expecting mothers can be reassured and doctors can operate the system more easily.

Figure 3: Schematic images of extracting the fetus region

NOGUCHI: In the cross-sectional images obtained by diagnostic ultrasound equipment, the placenta and the fetus appear white and the amniotic fluid appears dark. To extract the fetus region, a boundary line must be drawn through the dark area between the placenta and the fetus. First, the fetus region is roughly enclosed by a manually drawn line; then fine irregularities are smoothed out automatically. Because the cross-sectional images have low resolution, the boundaries are vague in places, yet the system can still place the boundary line appropriately and with high accuracy. That is one of the system's distinguishing features.
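The article does not say how the "minute unevenness" in the rough manual outline is smoothed. A circular moving average is one simple stand-in, sketched below: the outline is a closed list of (x, y) points, and each point is replaced by the mean of itself and its neighbours, wrapping around at the ends. The names `smooth_closed_contour`, `window` and `iterations` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def smooth_closed_contour(points: List[Point], window: int = 1,
                          iterations: int = 1) -> List[Point]:
    """Smooth a closed contour with a circular moving average.

    Each point is replaced by the mean of itself and its `window` neighbours on
    either side; indices wrap around because the contour is closed.
    """
    n = len(points)
    span = 2 * window + 1
    for _ in range(iterations):
        smoothed = []
        for i in range(n):
            neighbours = [points[(i + k) % n] for k in range(-window, window + 1)]
            smoothed.append((sum(p[0] for p in neighbours) / span,
                             sum(p[1] for p in neighbours) / span))
        points = smoothed
    return points

# A rough square outline with one jagged point at (3, 0).
outline = [(3, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1)]
smooth = smooth_closed_contour(outline)
```

A plain moving average also shrinks a convex outline slightly on every pass, so a practical system would need to guard against pulling the line into the fetus itself.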

NOGUCHI: We had trouble coming up with an algorithm that draws the boundary line in the most appropriate place where the boundary is vague. Humans can draw appropriate boundary lines even in vague areas, but machines are not very good at this. If the machine draws the line step by step, starting from where the boundary is clear, it is likely to draw the wrong line in sections where the boundary is ambiguous.

We therefore devised a boundary-drawing algorithm that considers the overall balance of the image rather than just a narrow local area, which I think is how people naturally approach the task. In other words, the system takes a wider perspective, covering the entire image, to find appropriate positions for the boundary line.
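The article does not name the algorithm, so the following is only an illustration of the "whole image rather than narrow scope" idea using a different, well-known technique: a dynamic-programming minimum-cost path. It assumes a simplified geometry in which the boundary runs left to right through a grayscale cross-section, one row per column, preferring dark (amniotic fluid) pixels while penalising jumps between neighbouring columns. The names `global_boundary`, `greedy_boundary`, `smooth_weight` and `max_step` are hypothetical.

```python
from typing import List

def global_boundary(image: List[List[float]], smooth_weight: float = 1.0,
                    max_step: int = 1) -> List[int]:
    """Return one row index per column for a left-to-right boundary line.

    Path cost = sum of the pixel intensities it crosses (dark fluid is cheap)
    + smooth_weight * |row change| between neighbouring columns. Dynamic
    programming minimises this total over the whole image at once, so an
    ambiguous column is settled by the columns around it.
    """
    rows, cols = len(image), len(image[0])
    cost = [[float("inf")] * cols for _ in range(rows)]
    back = [[0] * cols for _ in range(rows)]  # row used in the previous column
    for r in range(rows):
        cost[r][0] = image[r][0]
    for c in range(1, cols):
        for r in range(rows):
            for dr in range(-max_step, max_step + 1):
                prev = r + dr
                if 0 <= prev < rows:
                    cand = cost[prev][c - 1] + smooth_weight * abs(dr)
                    if cand < cost[r][c]:
                        cost[r][c] = cand
                        back[r][c] = prev
            cost[r][c] += image[r][c]
    # Trace back from the cheapest end point.
    r = min(range(rows), key=lambda i: cost[i][cols - 1])
    path = [r]
    for c in range(cols - 1, 0, -1):
        r = back[r][c]
        path.append(r)
    return path[::-1]

def greedy_boundary(image: List[List[float]]) -> List[int]:
    """Darkest pixel in each column on its own: the narrow-scope approach."""
    return [min(range(len(image)), key=lambda r: image[r][c])
            for c in range(len(image[0]))]

# Dark band on row 2, blurred in column 2, with a misleading dark spot on row 0.
img = [[9, 9, 4, 9, 9],
       [9, 9, 9, 9, 9],
       [0, 0, 5, 0, 0],
       [9, 9, 9, 9, 9],
       [9, 9, 9, 9, 9]]
```

On this toy image the greedy version jumps to the decoy in the vague column, while the global version keeps the line on the dark band, which is the behaviour the interview describes.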

Moreover, this algorithm must not fail: if boundary lines are drawn in the wrong places, part of the fetus's body may be missing from the images, and expecting mothers who see them may become uneasy. We felt pressure to ensure that appropriate boundary lines are drawn as consistently as possible. To minimize failures, we also created an error judgment function that, when the machine's boundary lines may have failed, automatically reverts to the boundary lines from before the possible failure.
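The interview only says that the error judgment function reverts to the earlier boundary when a failure is suspected; it does not say how failure is detected. The sketch below invents one plausible rule purely for illustration: if the area enclosed by the new boundary differs from the previous one by more than a set fraction, the new boundary is rejected and the previous one is kept. `polygon_area` uses the standard shoelace formula; `accept_or_rollback` and `max_area_change` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def polygon_area(points: List[Point]) -> float:
    """Area enclosed by a closed polygon (shoelace formula)."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def accept_or_rollback(previous: List[Point], proposed: List[Point],
                       max_area_change: float = 0.2) -> Tuple[List[Point], bool]:
    """Keep `proposed` unless it looks like a failure; otherwise revert.

    Hypothetical failure test: the enclosed area changed by more than
    `max_area_change` (as a fraction of the previous area), which might mean
    part of the fetus was cut off or part of the placenta was included.
    Returns (boundary_to_use, accepted).
    """
    prev_area = polygon_area(previous)
    if prev_area == 0.0:
        return proposed, True  # degenerate previous boundary: nothing to fall back to
    change = abs(polygon_area(proposed) - prev_area) / prev_area
    if change > max_area_change:
        return previous, False
    return proposed, True

square = [(0, 0), (10, 0), (10, 10), (0, 10)]
```

Returning the acceptance flag as well as the boundary lets the caller log or display when a fallback happened.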

NOGUCHI: We asked doctors to try the product and received favorable feedback, because they were able to obtain clearer images more quickly than before.

The automatic extraction function can be switched on and off. One doctor, who used the system without noticing that the function was on, told us that automatic extraction was unnecessary because the images looked clear. When the function was turned off, however, things did not go as well, and the doctor agreed that it was necessary after all.

SHIBAHARA: However good a function is, it won't be used if it is inconvenient to operate or causes failures. So we were happy to hear that a doctor had used the automatic extraction function without even realizing it, and without any stress.

NOGUCHI: In this latest research we worked toward "cute" and "preferred" image processing. Going forward, while continuing to improve the system's presentation technologies and operability, we want to develop technologies that help doctors with treatment and diagnosis. For example, we envisage technology that automatically measures the weight and height of the baby from its images, and technology that alerts doctors as early as possible to possible dangers to the baby, including illness. In particular, Japan has relatively few obstetricians, and each one carries a heavy workload. Our next goal is to produce products that reduce this load as much as possible and contribute directly to medical treatment.

Such technologies should reduce the risk of overlooking diseases in babies, which also benefits the expecting mothers, who are the patients.

SHIBAHARA: Doctors have cutting-edge technologies in medicine, and we have cutting-edge technologies in information science. However, neither side knows much about the other's field. We have technologies as tools but do not know how they should be used; doctors have things they want to accomplish but do not know how information science could make them possible. Our technologies gain medical value only when doctors' views are reflected in them. We believe that by working directly with doctors on the medical front line, we have been able to collaborate extremely well and contribute to both medicine and information science. Going forward, we would like to continue feeding such firsthand, front-line views into our products.